Improve The Indexing Of Your Website’s Recently Updated Pages Using Fetch As Googlebot In Google Webmaster Tools

Google has a lot of different ways to discover content on the web.

Googlebot (Google’s web spider) regularly crawls billions of web pages to fetch and process text, images, videos and other document formats (e.g. PDF, DOC) embedded in websites. This process can be extremely fast for popular sites that publish a lot of unique content on a regular basis: new stories on big media sites are indexed within about 10 seconds, while a new story on this blog takes around a minute.

But how do you make sure Google’s crawler indexes your site’s recently updated pages within a few seconds? How do you tell Google that a few pages on your site need immediate crawling and deeper indexing?

Google maintains a public page where anyone can ask for their site’s URLs to be crawled and indexed. This page was built quite a while ago, and it is no longer considered a viable way to get your pages crawled and indexed faster.

The good news is that Google has recently introduced a better and faster way to index your website’s internal and recently updated pages. If you have a Google Webmaster Tools account, you can fetch those pages and tell Google that they need immediate crawling.

Let Google Crawl Your Important Pages Really Fast

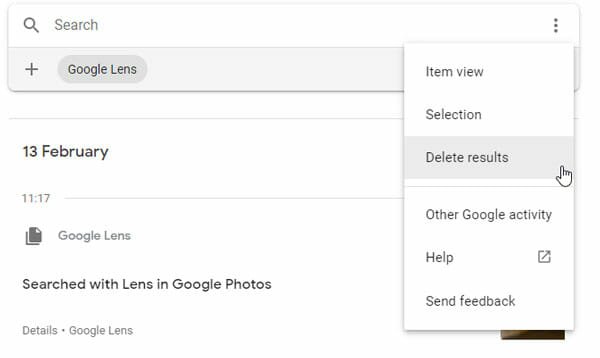

Log in to your Google Webmaster Tools account and go to the “Fetch as Googlebot” page. Enter the URL of the page you want Google to index and click “Fetch”. Once Google has successfully fetched the page, you will see a link to submit that page to Google’s index.

When you submit the URL to Google’s index, you have two options:

1. Tell Google to index only this page

2. Tell Google to index this page and all the pages that are linked from the current page.

Here is the option which will be shown:

Generally, I would go with the first option, but if you have significantly changed your site’s structure, go ahead and choose the “URL and all linked pages” option.

Google does not guarantee that it will index your pages or process every request right away. However, this method can improve the indexing of older pages that have been updated recently. You can test this feature with pages that have no backlinks and normally take a long time to get crawled.

When Should You Use The Fetch As Googlebot Feature In Google Webmaster Tools?

There are several situations in which the Fetch as Googlebot feature in Google Webmaster Tools can come in handy. Some examples:

1. You are live-blogging an event or breaking a news story. Chances are that competing sites are writing about the same story at that very moment, so it is important to get your page indexed as early as possible.

2. You are running a contest on your site, and the content of a page changes every hour as users add entries and moderators delete some of them. If you want Google to rank that page for relevant queries, requesting a fresh crawl can help.

3. Your site is fairly new and has no backlinks. You want Google to crawl it as fast as possible because you want to rank for some time-critical keywords.

4. You have added a few pages to a directory of your site that was previously disallowed in the robots.txt file. This directory sits fairly deep within your site’s architecture, and you want to ping Googlebot and say “hey, here are a few pages on my site that sit in a very deep directory. Please crawl and index them, if you will”.

Please note that using Google’s Fetch as Googlebot feature is not mandatory and should be done sparingly. Don’t start fetching every page of your site; this won’t help your indexing or rankings. Use this feature only when a few pages need urgent indexing and better crawling.

If you are having issues with slow crawling, I highly recommend fixing crawl errors first and making sure your content is crawlable. Fetching a page in Google Webmaster Tools won’t get it crawled and indexed if you have blocked it with a robots.txt rule or with a meta noindex tag in the head section.
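Before fetching a page, it is worth confirming that neither of those blocks applies. Below is a minimal Python sketch using only the standard library: `urllib.robotparser` evaluates robots.txt rules for Googlebot, and a small `html.parser` subclass looks for `noindex` in `robots` or `googlebot` meta tags. The robots.txt text, page markup and example.com URLs are hypothetical placeholders, and the sketch only reads meta tags, so other ways of blocking a page are not covered.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def is_crawlable(url, robots_txt, user_agent="Googlebot"):
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser already lowercases tag and attribute names.
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            for directive in attributes.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html):
    """Return True if any robots/googlebot meta tag in html contains noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return "noindex" in parser.directives


if __name__ == "__main__":
    # Hypothetical robots.txt and page markup; example.com is a placeholder.
    robots_txt = "User-agent: *\nDisallow: /private/\n"
    page = '<html><head><meta name="robots" content="noindex,follow"></head></html>'
    print(is_crawlable("https://example.com/blog/new-post", robots_txt))  # True
    print(is_crawlable("https://example.com/private/draft", robots_txt))  # False
    print(has_noindex(page))  # True
```

If either check comes back the wrong way, fix the robots.txt rule or the meta tag first; fetching the page before that is wasted effort.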

Improve the Crawling And Indexing Of Your Website: Some Tips You Should Consider

If you want to improve the crawl rate of your website and make sure your pages are indexed faster, here are a few tips you should know:

1. Create a Google Webmaster Tools account and add your site to it. Routinely monitor the account for crawl errors, site-specific issues and redirects, and fix them as early as possible.

2. Create an XML sitemap of your website and submit it through your Google Webmaster Tools account. If possible, create separate sitemaps for Google News, videos and images.

3. If your website does not offer an RSS feed, create one. Google uses RSS feeds to discover new and fresh pages on your website.

4. Whenever you create a new page or publish a new blog post, tweet a link to it from your Twitter account. If that page gets a fair number of retweets, Google will notice it.

5. Use PubSubHubbub on your site. It’s an asynchronous way to tell Google that you have new content waiting to be crawled and indexed.

6. Produce content on a daily basis. Google loves sources that publish original and interesting content every day.

7. Check your robots.txt file and the meta tags in your head section. Have you blocked specific pages of your site from being crawled? Did you add a noindex,follow tag to any page by mistake?

8. Add a link to the new page on your site’s homepage. The homepage usually receives the most PageRank and link juice from external sites, so Google tends to visit it several times a day.

9. If you get a backlink to the page, it will be crawled right away. But be careful with this approach: don’t start spamming forums and blog directories to get backlinks for every other page of your site. That won’t help; it will hurt you in the long run.
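For tip 2, most blogging platforms and plugins generate a sitemap for you, but the format itself is simple. Here is a minimal Python sketch of a sitemap following the sitemaps.org protocol, built with the standard library’s `xml.etree.ElementTree`. The `build_sitemap` function, the example.com URLs and the dates are hypothetical illustrations; only the `loc` and `lastmod` fields are shown.

```python
import xml.etree.ElementTree as ET

# Namespace required by the Sitemaps protocol (sitemaps.org).
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return a minimal XML sitemap for (loc, lastmod) pairs.

    lastmod uses the W3C Datetime date form, e.g. "2011-03-15".
    ElementTree escapes characters such as "&" in URLs automatically.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages on a placeholder domain.
    print(build_sitemap([
        ("https://example.com/", "2011-03-15"),
        ("https://example.com/blog/updated-post", "2011-03-14"),
    ]))
```

Updating `lastmod` whenever you edit a page gives Google a hint about which older pages have changed recently.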
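For tip 3, an RSS 2.0 feed is also just a small XML document. The sketch below, assuming a hypothetical `build_rss` helper and placeholder blog details, builds a channel with one `item` per post and formats `pubDate` in the RFC 822 style RSS expects, via the standard library’s `email.utils.format_datetime`.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone
from email.utils import format_datetime


def build_rss(title, link, description, items):
    """Return a minimal RSS 2.0 feed.

    items is a list of (title, link, published) tuples, where published
    is a timezone-aware UTC datetime.
    """
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = title
    ET.SubElement(channel, "link").text = link
    ET.SubElement(channel, "description").text = description
    for item_title, item_link, published in items:
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = item_title
        ET.SubElement(item, "link").text = item_link
        ET.SubElement(item, "guid").text = item_link
        # RFC 822 style date, e.g. "Tue, 15 Mar 2011 09:30:00 GMT".
        ET.SubElement(item, "pubDate").text = format_datetime(published, usegmt=True)
    return ET.tostring(rss, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical blog and post on a placeholder domain.
    print(build_rss(
        "Example Blog",
        "https://example.com/",
        "Posts from a placeholder blog",
        [("Updated post", "https://example.com/blog/updated-post",
          datetime(2011, 3, 15, 9, 30, tzinfo=timezone.utc))],
    ))
```

Using the post URL as the `guid` keeps each item’s identity stable across feed updates.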
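For tip 5, a PubSubHubbub publisher tells its hub about new content with a form-encoded POST containing `hub.mode=publish` and `hub.url` set to the updated feed. The sketch below only builds that request with `urllib.request.Request` and does not send it. The hub and feed URLs are hypothetical placeholders: use the hub your feed actually advertises (its `rel="hub"` link) and your own feed address.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical hub and feed URLs; substitute your own.
HUB_URL = "https://hub.example.com/"
FEED_URL = "https://example.com/feed.xml"


def build_publish_ping(hub_url, feed_url):
    """Build (but do not send) a PubSubHubbub "publish" notification."""
    body = urlencode({"hub.mode": "publish", "hub.url": feed_url}).encode("ascii")
    return Request(
        hub_url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )


if __name__ == "__main__":
    request = build_publish_ping(HUB_URL, FEED_URL)
    print(request.get_method(), request.full_url)
    print(request.data.decode("ascii"))
    # Sending it would be one call: urllib.request.urlopen(request).
    # Hubs typically answer a successful publish ping with 204 No Content.
```

Many blogging platforms send this ping automatically when you publish, so check yours before wiring it up by hand.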

If you ask me, I would leave it up to Google to index newer pages on this site. I would check the technical aspects and architecture of my site and wouldn’t obsess over how frequently Google crawls the newer pages or recently updated posts of this blog.

It turns out Google does a really fine job: this page got indexed within one minute.